Opster Team


Briefly, this log message indicates that Elasticsearch was unable to estimate the memory overhead of loading fielddata for a field. One possible reason is that the Elasticsearch instance does not have enough memory available. To resolve the issue, configure the instance with enough memory to handle the operation.

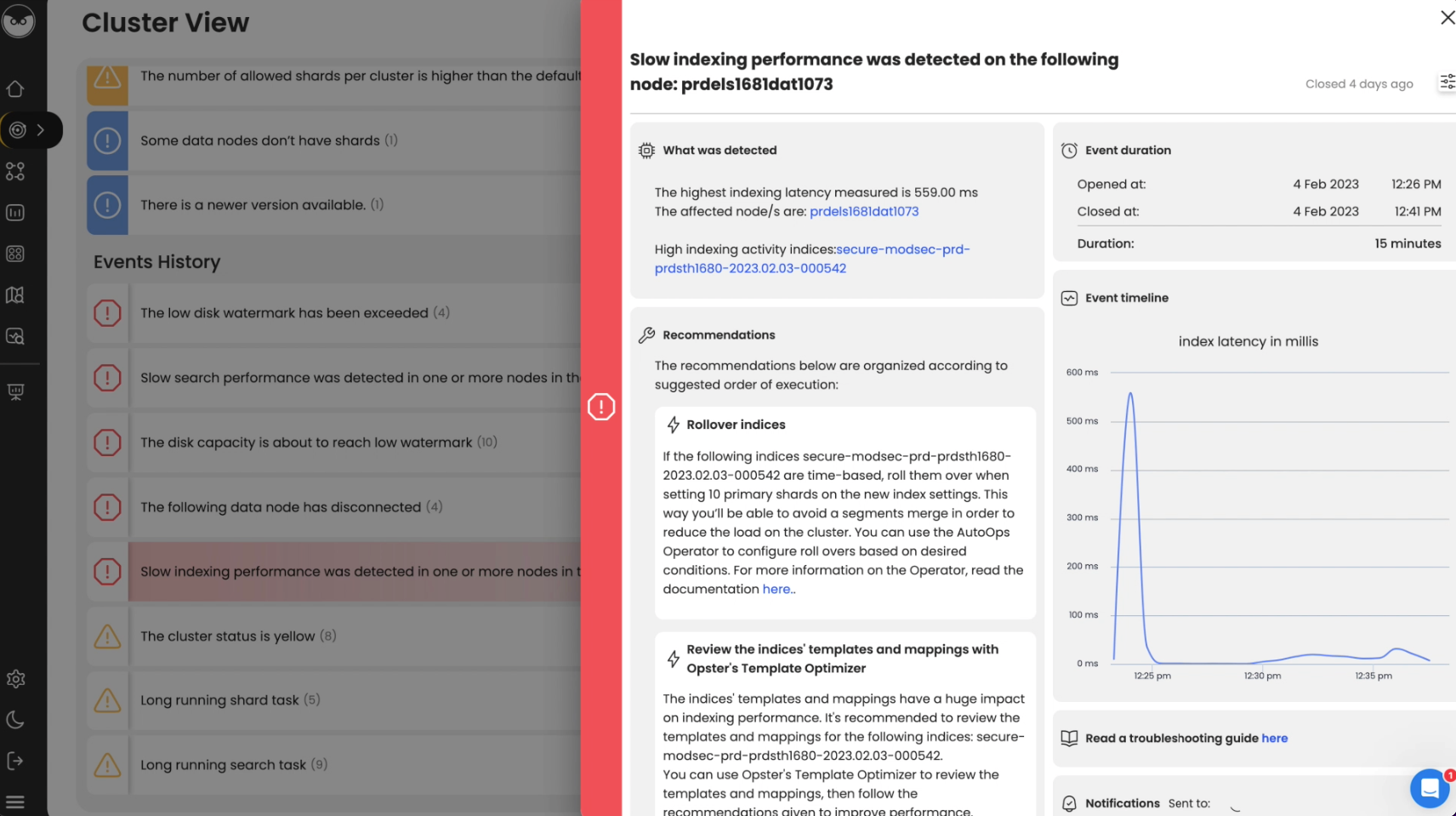


This guide will help you check for common problems that cause the log “Unable to estimate memory overhead” to appear. To understand the issues related to this log, read the explanation below about the following Elasticsearch concepts: fielddata, index and memory.

Overview

In Elasticsearch, fielddata comes into play when sorting or running aggregations (similar to the SQL GROUP BY, COUNT and AVERAGE functions) on text fields.

For performance reasons, there are rules about which kinds of fields can be aggregated. You can group by any numeric field, but a string field must either be of type keyword or, if it is a text field, have fielddata=true, because text fields do not support doc_values. (Doc values are an on-disk, column-oriented data structure, built at document indexing time, which makes aggregations possible.)

Fielddata is an in-memory data structure used by text fields for the same purpose. Because it consumes a lot of heap memory, it is disabled by default.

Examples

The following PUT mapping API call enables fielddata on the my_field text field:

PUT my_index/_mapping
{
  "properties": {
    "my_field": {
      "type": "text",
      "fielddata": true
    }
  }
}

Notes

- Because fielddata is disabled by default on text fields, attempting to aggregate on a text field without enabling it returns the following error message:

“Fielddata is disabled on text fields by default. Set `fielddata=true` on [`your_field_name`] in order to load field data in memory by uninverting the inverted index. Note that this can however use significant memory.”

If this happens, you can either enable fielddata on that text field or choose another way to query the data (fielddata consumes a lot of memory and is not recommended).
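One common way to "query the data another way" is to aggregate on a keyword sub-field instead of enabling fielddata, since keyword fields use doc values. The following Python sketch only builds the request bodies as dictionaries and does not contact a cluster. The sub-field name `keyword`, the aggregation name prefix `by_`, and both helper function names are assumptions made for illustration.

```python
import json


def keyword_multifield_mapping(field_name):
    """Mapping body: a text field with a keyword sub-field for aggregations."""
    return {
        "properties": {
            field_name: {
                "type": "text",
                # Hypothetical sub-field name; keyword fields use doc values by default.
                "fields": {"keyword": {"type": "keyword"}},
            }
        }
    }


def terms_aggregation(field_name, size=10):
    """Search body that buckets documents by the keyword sub-field."""
    return {
        "size": 0,  # return aggregation results only, no search hits
        "aggs": {
            "by_" + field_name: {
                "terms": {"field": field_name + ".keyword", "size": size}
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(keyword_multifield_mapping("my_field"), indent=2))
    print(json.dumps(terms_aggregation("my_field"), indent=2))
```

The first body would be sent with a PUT mapping call like the one above, the second with a search request. A sub-field added to an existing mapping is only populated for documents indexed after the change.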

Index and indexing in Elasticsearch

Overview

In Elasticsearch, an index (plural: indices) contains a schema and can have one or more shards and replicas. An Elasticsearch index is divided into shards and each shard is an instance of a Lucene index.

Indices are used to store the documents in dedicated data structures corresponding to the data type of fields. For example, text fields are stored inside an inverted index whereas numeric and geo fields are stored inside BKD trees.

Examples

Create index

The following example uses the typeless mapping syntax of Elasticsearch version 7.x onwards. It creates an index named test_index1 with two shards, each having one replica.

PUT /test_index1?pretty
{
  "settings": {
    "number_of_shards": 2,
    "number_of_replicas": 1
  },
  "mappings": {
    "properties": {
      "tags": { "type": "keyword" },
      "updated_at": { "type": "date" }
    }
  }
}

List indices

All the index names and their basic information can be retrieved using the following command:

GET _cat/indices?v

Index a document

Let’s add a document in the index with the command below:

PUT test_index1/_doc/1
{
  "tags": [
    "opster",
    "elasticsearch"
  ],
  "updated_at": "2020-01-01"
}

Query an index

GET test_index1/_search
{
  "query": {
    "match_all": {}
  }
}

Query multiple indices

It is possible to search multiple indices with a single request. In a raw HTTP request, the index names are sent as a comma-separated list, as shown in the example below. When querying through a programming language client such as Python or Java, the index names are passed as a list.
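As a minimal sketch of the comma-separated format, the Python helper below turns a list of index names into the path of a raw HTTP search request. The function name `search_path` is hypothetical, and the sketch assumes index names that need no URL encoding.

```python
def search_path(indices):
    """Build the request path for searching one or more indices."""
    if not indices:
        raise ValueError("at least one index name is required")
    # Index names are joined with commas, without spaces.
    return "/" + ",".join(indices) + "/_search"


if __name__ == "__main__":
    print(search_path(["test_index1", "test_index2"]))
```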

GET test_index1,test_index2/_search

Delete indices

DELETE test_index1

Common problems

- It is good practice to define the settings and mapping of an index wherever possible. If this is not done, Elasticsearch tries to guess the data type of each field at indexing time. This automatic process can have disadvantages, such as mapping conflicts, duplicate data and incorrect data types being set in the index. If the fields are not known in advance, it is better to use dynamic index templates.

- Elasticsearch supports wildcard patterns in index names, which helps when querying multiple indices but can also be very destructive. For example, it is possible to delete all the indices with a single command:

DELETE /*

To disable this, add the following line to elasticsearch.yml:

action.destructive_requires_name: true
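With this setting enabled, delete requests must name indices explicitly, and wildcard expressions or `_all` are rejected. A client can apply a similar guard before sending a request. The Python sketch below is a simplified approximation, not Elasticsearch's own check, and the function name `is_wildcard_delete` is hypothetical.

```python
def is_wildcard_delete(target):
    """Return True if a delete target uses a wildcard or _all instead of explicit names."""
    parts = [part.strip() for part in target.split(",")]
    return any(part == "_all" or "*" in part for part in parts)


if __name__ == "__main__":
    for target in ["test_index1", "*", "test_index1,test_*", "_all"]:
        print(target, is_wildcard_delete(target))
```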

Log Context

The log “Unable to estimate memory overhead” is emitted by the class PagedBytesIndexFieldData (PagedBytesIndexFieldData.java).

We extracted the following from the Elasticsearch source code for those seeking in-depth context:

        }
        long totalBytes = totalTermBytes + (2 * terms.size()) + (4 * terms.getSumDocFreq());
        return totalBytes;
    }
} catch (Exception e) {
    logger.warn("Unable to estimate memory overhead", e);
}
return 0;
}
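The arithmetic in the excerpt can be checked with a small worked example. The Python sketch below mirrors the formula (total term bytes, plus 2 per term, plus 4 per term-document entry) and the fallback to 0 when the estimate fails. The dictionary keys, the function names and the input numbers are made up for illustration and are not part of the Elasticsearch code.

```python
import logging

logger = logging.getLogger(__name__)


def estimate_fielddata_bytes(total_term_bytes, term_count, sum_doc_freq):
    """Same arithmetic as the excerpt: term bytes + 2 * terms + 4 * sumDocFreq."""
    return total_term_bytes + 2 * term_count + 4 * sum_doc_freq


def safe_estimate(stats):
    """Return the estimate, or 0 if the statistics cannot be read."""
    try:
        return estimate_fielddata_bytes(
            stats["total_term_bytes"],  # hypothetical key names
            stats["term_count"],
            stats["sum_doc_freq"],
        )
    except (KeyError, TypeError) as exc:
        # Mirrors the warning in the excerpt, then falls back to 0.
        logger.warning("Unable to estimate memory overhead", exc_info=exc)
        return 0


if __name__ == "__main__":
    stats = {"total_term_bytes": 1_000_000, "term_count": 50_000, "sum_doc_freq": 2_000_000}
    print(safe_estimate(stats))  # 1_000_000 + 100_000 + 8_000_000 = 9_100_000
    print(safe_estimate({}))     # missing statistics fall back to 0
```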
